Website A/B Testing for CRO: Quick Start Guide 2023

As you look for ways to optimize your website’s conversion rate, you can expect to run many A/B tests along the way.

So what is CRO testing? Why should you start A/B testing your website for CRO? And how do you run an A/B test effectively?

We answer all these questions in this post. Let’s start with a definition.


What is an A/B Test for CRO?

When it comes to conversion rate optimization (CRO), A/B testing (also known as split testing or bucket testing) usually means testing factors that affect the conversion rate of a website: copy, header, images, design, calls-to-action, and layout, just to name a few. It is one of the most widely used techniques for ensuring that your website is set up to help guide users through pages and convert at crucial touchpoints.

The Difference Between CRO and SEO A/B Testing

A/B Testing for CRO is quite different from A/B testing for SEO. In this part, we’ll aim to shed light on each technique, how they differ, and what they’re used for.

1. Aims and Objectives

Although CRO and SEO testing both use A/B testing, their goals – and how they are carried out – are very different. CRO aims to improve the conversion rate once visitors have reached the site; SEO-focused testing aims to increase the number of visitors to the site by implementing technical changes that improve the website’s position in the SERPs.

2. Controls and Variants

In CRO A/B testing, two versions of the same page are set up and run live at the same time, and each human visitor is randomly assigned to one of them. Thus, it is the users themselves who are split into control and variant groups. Data on how users interact with their assigned version is then collected and compared.
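To make the random split concrete, here is a minimal Python sketch of one common approach: hashing a user identifier so that each visitor is assigned to a version at random, yet consistently sees the same version on return visits. The function name, the experiment key, and the choice of SHA-256 are illustrative assumptions, not a description of how any particular testing tool works internally.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants: tuple[str, ...] = ("control", "variant")) -> str:
    """Deterministically map a user to one variant of an experiment (hypothetical helper)."""
    # Hash the experiment name together with the user ID so the same user
    # can land in different buckets across different experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Treat the hex digest as a large integer and take it modulo the number
    # of variants, which spreads users roughly evenly across them.
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    counts = {"control": 0, "variant": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}", "homepage-headline")] += 1
    print(counts)  # roughly 5,000 in each group
```

Because the assignment depends only on the user ID and experiment name, no lookup table is needed to keep a returning visitor in the same group.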

In SEO A/B testing, pages are split into two sets: controls and variants. Changes are made only to the variant pages, and their performance is compared with a previously determined performance forecast.
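The comparison against a forecast can be illustrated with a deliberately simplified Python sketch. It assumes the forecast is built by scaling the variant pages’ pre-test traffic by the growth the control pages saw over the same period. This ratio method, the function name, and the traffic figures are illustrative assumptions; dedicated SEO testing setups may rely on more sophisticated forecasting models.

```python
def seo_test_uplift(control_before: float, control_after: float,
                    variant_before: float, variant_after: float) -> float:
    """Return the variant group's traffic relative to a simple forecast (hypothetical helper).

    The forecast assumes the variant pages would have changed by the same
    ratio as the control pages (e.g., seasonal swings) had nothing changed.
    """
    if control_before <= 0 or variant_before <= 0:
        raise ValueError("baseline traffic must be positive")
    growth = control_after / control_before
    forecast = variant_before * growth
    # Positive result: variant pages beat the forecast; negative: they fell short.
    return variant_after / forecast - 1


if __name__ == "__main__":
    # Hypothetical organic sessions for each group of pages, before and during the test.
    uplift = seo_test_uplift(10_000, 11_000, 8_000, 9_680)
    print(f"{uplift:.1%}")  # prints 10.0%
```

Here the control pages grew 10% on their own, so the variant pages are only credited with the growth beyond that forecast.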

3. Versions of The Pages

CRO A/B testing requires two versions of the same page to be live at the same time so data can be collected and compared.

In SEO split testing, there is only one version of each page at any time. Because Google determines how these pages rank, and Google discourages duplicate content, serving two versions of the same page is avoided.

In summary, there are several key differences between CRO and SEO A/B testing. Both tests are important steps in improving online performance but target very different metrics.

| CRO A/B Testing | SEO A/B Testing |
| --- | --- |
| Each individual page has multiple variations: a control and at least one variant | Multiple pages within a family of pages are grouped into either the control or the variant; there is only ever one version of each individual page |
| Optimizes for visitors once they are on the page by improving page usability and user experience | Optimizes for Google (and searchers) by improving technical aspects that increase the pages’ position in the SERPs |
| Aims to increase the number of people converting once they are on the page | Aims to increase traffic |
| Uses Google Optimize and Optimize 360 to check for statistical significance | Forecasts traffic, then checks for statistical significance against the forecast using Google Analytics / GA 360 |

Why Should You Do CRO A/B Testing?

The main reason CRO tests are important is that they let marketers identify, with relatively little investment, which alternative has the potential to deliver the highest conversion rate.

For example, when you leave the “cart” page of an online ordering portal without placing an order, the website or product developer is left wondering why. Was it loss of interest? Did you run out of time? Was the product more expensive than you had budgeted for? Or was it the ordering process itself? Without testing, you cannot gather the information needed to analyze this customer behavior and understand what drives the conversion rate on your website.

Testing takes the guesswork out of website optimization and enables data-informed decisions that shift business conversations from “we think” to “we know.” By measuring the impact that changes have on your metrics, you know what works on your website.

It’s the perfect method to improve conversion rate, increase revenue, grow your subscriber base, and improve your customer acquisition and lead generation results.

3 Top CRO-Worthy A/B Testing Tools

Running a successful A/B test requires a data-backed hypothesis, an effective A/B testing tool, and a system for gathering feedback, so that you can run the test and analyze the results accurately.

In this part, we introduce three CRO-worthy A/B testing tools, both free and paid.

So, let’s crush this.

1. Google Optimize: Begin Your A/B Test with Google’s Free Tool

Google Optimize is a split testing tool offered alongside Google Analytics. If you are looking for a powerful A/B testing solution with no monetary cost, look no further.

Google Optimize is a useful A/B testing tool where you can create, test, and analyze various versions of your website. You can also conduct simple split URL tests and multivariate tests as desired.

Along with testing capabilities, it also offers server-side testing, customizable URL rules, experience personalization, and more, so you can test and deliver online experiences to engage visitors.

Google Optimize Main Features:

- You can run A/B tests, Multivariate tests, Split URL tests, and Redirect tests using Google Optimize.

- Optimize also has a WYSIWYG editor so that you can create and deploy your variations quickly for any device.

- You can segment your audience according to the source, device, location, etc., to personalize their experience.

- It lets you set objectives and goals to measure your hypothesis’s success as you run the test.

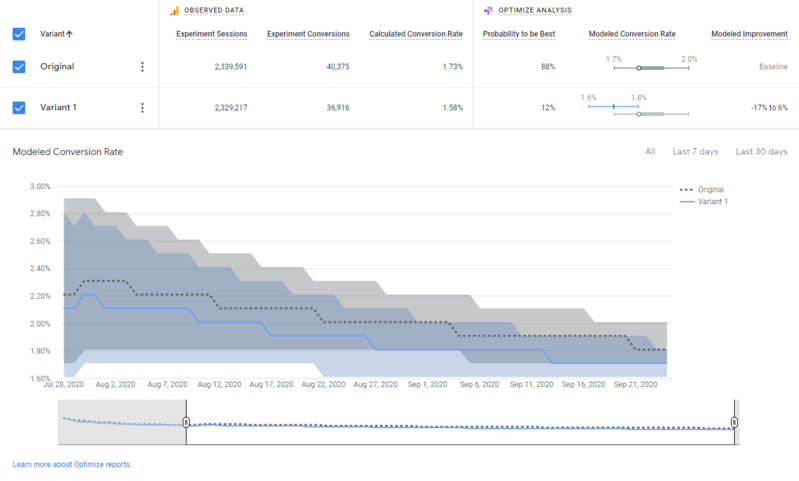

- Google Optimize’s analytical engine uses Bayesian inference to show the real-time performance of your variations compared to the control, such as each variant’s probability of beating the control, conversion rate comparisons, statistical significance, confidence levels, etc.

- Optimize is also natively integrated with Google Analytics so that you can get real-time insights about visitor behavior, website performance, audience segmentation, traffic sources, etc.
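Google Optimize’s exact model is not described here, so the following is only a generic Beta-Binomial sketch of the kind of “probability to beat the control” figure a Bayesian engine reports. It assumes a uniform Beta(1, 1) prior and estimates the probability by Monte Carlo sampling; the function name, prior, and numbers are illustrative assumptions.

```python
import random


def prob_variant_beats_control(conv_control: int, n_control: int,
                               conv_variant: int, n_variant: int,
                               draws: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(variant rate > control rate) (hypothetical helper)."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior for each conversion rate under a uniform Beta(1, 1) prior:
        # Beta(1 + conversions, 1 + non-conversions).
        rate_c = rng.betavariate(1 + conv_control, 1 + n_control - conv_control)
        rate_v = rng.betavariate(1 + conv_variant, 1 + n_variant - conv_variant)
        if rate_v > rate_c:
            wins += 1
    return wins / draws


if __name__ == "__main__":
    # Hypothetical results: 200 of 5,000 control visitors vs. 260 of 5,000 variant visitors converted.
    print(prob_variant_beats_control(200, 5_000, 260, 5_000))
```

Unlike a p-value, the result reads directly as “how likely is it that the variant is truly better,” which is why Bayesian tools tend to present it prominently.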

2. Optimizely – Enterprise A/B and CRO Tools at Competitive Prices

For larger enterprises, Optimizely is often the preferred choice for top-of-the-line A/B testing tools.

Using its powerful A/B and multi-page experimentation tool, you can run multiple experiments on one page at the same time, allowing you to test several variables of your web design.

Optimizely also offers testing on dynamic websites, experiment dimensions such as ad campaign, geography, and cookies, and segmentation parameters such as device, browser, and campaign.

Optimizely Main Features:

- Efficiently run A/B tests and multi-page tests.

- It offers a powerful visual editor to build and test variations.

- You can allocate traffic to the variations manually or use the Stats Accelerator to distribute traffic depending on visitor behavior automatically.

- Recommendation engine: set up recommendations and test different algorithms.

- It includes options such as custom targeting, audience prioritization, custom templates, a visual editor, developer tools, and APIs.

3. VWO – A Lot Like Optimizely but A Lot More Budget-Friendly

VWO (Visual Website Optimizer) is also a popular A/B testing tool in the marketing space. In addition to being a top choice for businesses with smaller budgets, it is frequently used alongside Optimizely by businesses that run complex testing campaigns.

VWO Main Features:

- Perform A/B tests, Multivariate tests, and split URL tests to test your variations.

- It offers session recording, heatmaps, on-page surveys, etc., to get real insights about visitor behavior and experience.

- Its easy-to-use visual editor lets you create test variations in minutes.

- Allows visitor segmentation like mobile vs. desktop traffic or new vs. returning visitors, or you can use custom dimensions to create new segmentations.

- Integrations available: Google Analytics, Kissmetrics, Demandware, Magento, and others.

How to Run an A/B Test On Your Website?

Regardless of which A/B testing tool you use or which element you are testing, every A/B test follows a similar pattern:

Step 1. Define The Core Business Goals

As with anything in life, you need an explicit goal to guide your later testing decisions. Your goals are the metrics you use to determine whether or not the variation is more successful than the original version. Goals can be anything from clicking a button or link to product purchases and e-mail signups.

For the most part, your goals will fall into the following areas:

- Solving UX issues and common visitor pain points

- Improving performance from existing traffic (higher conversions and revenue, lower customer acquisition costs)

- Increasing overall engagement (reducing bounce rate, improving click-through rate, and more)
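Whatever goal you choose, it usually boils down to a conversion rate per version and the relative change between them. The Python sketch below shows that arithmetic; the helper names and the signup numbers are illustrative assumptions.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who completed the goal (hypothetical helper)."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative change of the variant's rate versus the control's (hypothetical helper)."""
    if control_rate == 0:
        raise ValueError("control rate must be non-zero")
    return (variant_rate - control_rate) / control_rate


if __name__ == "__main__":
    # Hypothetical e-mail signup goal: 120 of 4,000 control visitors vs. 150 of 4,000 variant visitors.
    control = conversion_rate(120, 4_000)
    variant = conversion_rate(150, 4_000)
    print(f"control {control:.2%}, variant {variant:.2%}, "
          f"lift {relative_lift(control, variant):+.1%}")
```

In this example the variant converts at 3.75% versus 3% for the control, a 25% relative lift. Whether that difference is real or due to chance is a separate question, answered at the evaluation step.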

Step 2. Selecting What to Test

When it comes to optimizing your website’s conversion rate, your testing options can be grouped into 3 major types based on your goal:

- Element testing: Individual elements within a page. E.g.: Headline, buttons, copy, etc.

- Page testing: Combinations of many elements. E.g.: Page layouts.

- Visitor flow testing: The path you expect visitors to take after entering your website. E.g.: Multi-step checkout vs. single-step checkout.

Based on your research and practical experience, decide what to test to improve your website’s conversion rate.

Step 3. Observe and Formulate Hypothesis

An A/B test starts by identifying a problem that you wish to resolve, or a user behavior you want to encourage or influence. Once it is identified, the marketer typically formulates a hypothesis: an educated guess that the experiment’s results will either validate or invalidate.

A hypothesis typically looks like this:

“If we add a social proof badge to the product detail pages, then the conversion rate will increase by 10%, because the badge informs visitors of the product’s popularity.”

As you can see, a proper hypothesis gives you a clear overview of what will be changed, what impact the change is expected to have, and the mechanism behind that impact.

Step 4. Create Variations

The next step in your testing program is to create a variation based on your hypothesis and A/B test it against the existing version (the control). A variation is a copy of your current page with the changes you want to test. You can test multiple variations against the control to see which one works best.

Step 5. Running The Test

Once the variations are ready, launch them in exactly the same environment as the control to start the test. Depending on the nature of the page, your site traffic, and the statistical significance you need to achieve, the test could take anywhere from a few hours to a few weeks.
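How long a test must run follows largely from traffic and the size of the effect you want to detect, such as the 10% lift in the example hypothesis from Step 3. The Python sketch below uses the standard normal-approximation sample-size formula for comparing two conversion rates (two-sided, 5% significance, 80% power by default), then converts it into days. The helper names, the 3% baseline rate, and the 5,000 daily visitors are illustrative assumptions, and the duration helper assumes all traffic is split evenly across versions.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_version(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per version for a two-sided two-proportion test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_run(n_per_version: int, num_versions: int, daily_visitors: int) -> int:
    """Days needed if all daily traffic is split evenly across the versions."""
    return ceil(n_per_version * num_versions / daily_visitors)


if __name__ == "__main__":
    # Hypothetical: 3% baseline conversion rate, hoping to detect a 10% relative lift.
    n = sample_size_per_version(0.03, 0.10)
    print(n, "visitors per version")
    for versions in (2, 3, 4):
        print(versions, "versions:", days_to_run(n, versions, 5_000), "days at 5,000 visitors/day")
```

With these inputs the formula calls for roughly 53,000 visitors per version. This is why small lifts on low-traffic pages can take weeks to confirm, and why every extra variation stretches the test further.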

Step 6. Evaluating and Concluding

Using your pre-established hypothesis and key metrics, it’s time to interpret your findings. With your chosen confidence level in mind, use your testing tool to determine whether the results are statistically significant.

If none of the variations produced statistically significant results (that is, the test was inconclusive), several options are available. For one, it can be reasonable to simply keep the original version in place. You can also reconsider your significance level or re-prioritize certain KPIs in the context of the page being tested. Finally, a more powerful or drastically different variation may be in order.
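To see what “statistically significant” means in practice, here is a minimal Python sketch of a classical (frequentist) two-proportion z-test, one common way to compare the conversion rates of a control and a variant. Bayesian tools such as the Google Optimize engine described earlier report probabilities instead, so treat this as an illustration of the general idea rather than what your specific tool computes. The function name and the numbers are illustrative assumptions.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) comparing the conversion rates of A and B."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; cannot test")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: control 200/5,000 conversions, variant 260/5,000.
    z, p = two_proportion_z_test(200, 5_000, 260, 5_000)
    verdict = "significant" if p < 0.05 else "inconclusive"
    print(f"z = {z:.2f}, p = {p:.4f} -> {verdict} at the 95% confidence level")
```

A p-value below 0.05 corresponds to the 95% reliability threshold discussed in the best practices below; anything above it falls into the inconclusive case.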

Tips and Best Practices for A/B Testing

Below are several best practices for A/B Testing that can help you avoid running into trouble.

- Ensure data reliability for the A/B testing solution

Conduct at least one A/A test to verify that traffic is randomly assigned to the different versions.

- Test one variable at a time

This makes it possible to precisely isolate the impact of the variable. If the location of an action button and its label are modified simultaneously, it is impossible to identify which change produced the observed impact.

- Adapt the number of variations to your traffic volume

If there are many variations but little traffic, the test will take a very long time to produce meaningful results. The less traffic allocated to the test, the fewer versions it should include.

- Segment tests

In some cases, running a test on all of a site’s users makes no sense. If a test aims to measure the impact of different wordings of customer benefits on a site’s registration rate, showing it to users who are already registered is pointless. The test should instead target new visitors.

- Let tests run long enough

Even if a test quickly shows statistical reliability, you need to take into account the sample size and differences in behavior linked to the day of the week. It is advisable to let a test run for at least one week, ideally two.

- Wait for statistical reliability before acting

Until the test has reached a statistical reliability of at least 95%, it is not advisable to make any decisions. Otherwise, there is too high a probability that the observed differences are due to chance rather than to the modifications made.

- Know when to end a test

If a test takes too long to reach a reliability rate of 95%, the tested element likely has little or no impact on the measured indicator. In this case, it is pointless to continue the test, since it would unnecessarily tie up traffic that could be used for another test.

- Take note of marketing actions during a test

External variables can distort the results of a test. Traffic acquisition campaigns, for example, often attract users whose behavior is unusual. Detecting such campaigns, and any other tests running at the same time, helps limit these side effects.
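For the first tip above (running an A/A test to confirm that traffic is randomly assigned), one complementary check is a sample ratio mismatch (SRM) test: a chi-square goodness-of-fit test asking whether the observed visitor counts match the configured split. The Python sketch below assumes a two-way split; the function name, the visitor counts, and the strict 0.001 alert threshold are illustrative assumptions rather than a standard built into any particular tool.

```python
from math import sqrt
from statistics import NormalDist


def sample_ratio_p_value(n_a: int, n_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for an observed two-way traffic split."""
    total = n_a + n_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = (n_a - expected_a) ** 2 / expected_a + (n_b - expected_b) ** 2 / expected_b
    # With one degree of freedom, the chi-square statistic is a squared
    # standard normal, so the p-value is P(|Z| > sqrt(chi2)).
    return 2 * (1 - NormalDist().cdf(sqrt(chi2)))


if __name__ == "__main__":
    # Hypothetical A/A test configured for a 50/50 split.
    for a, b in [(5_020, 4_980), (5_000, 5_400)]:
        p = sample_ratio_p_value(a, b)
        status = "possible assignment problem" if p < 0.001 else "split looks fine"
        print(a, b, f"p = {p:.4g}", status)
```

A very small p-value means the split itself is off, so any conversion differences between the versions should not be trusted until the assignment issue is fixed.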

Wrapping Up

A/B testing is one of the most powerful and effective ways to improve conversion rate. After reading this comprehensive piece on A/B testing for optimizing conversion rate, you should now be fully equipped to plan your own optimization roadmap.

If you found this guide useful, spread the word and help fellow experience optimizers A/B test without falling for the most common pitfalls. Happy testing!