11 Best Practices of Google Play Store Listing Experiments (A/B Test)

A/B testing is an essential part of an App Store Optimization strategy. For Android developers, Google Play Store Listing Experiments is the main tool for store listing A/B testing. It is free, and it lets developers run well-planned A/B tests to find the most effective graphics and localized text, which can lead to a higher conversion rate and more downloads.

However, there are many instances where tests have been run improperly. In this post, we summarize 11 best practices for running Google Play Store Listing Experiments correctly to increase conversions.

Let’s dive in.

Contents

- What is Google Play Store Listing Experiments?

- Google Play A/B Testing Best Practices

- 1. Determine A Clear Objective

- 2. Target the Right People

- 3. Test Just ONE Variant at A Time

- 4. Test ONE Element at A Time

- 5. Prioritize Visuals Over Words

- 6. Don’t Test Worldwide

- 7. The Experiments Should Use the Largest Possible Test Audience

- 8. Test For At Least 7 Days, Even If You Have A Winning Test After 24 Hours

- 9. Run A/B/B Tests to Flag False Positive Results

- 10. Don’t Apply a Google Play Winning Test On iOS

- 11. Monitor How Your Installs Are Affected After Applying a Winning Test

- Should You Still Test Feature Graphic?

- Summary: Should You Rely on Google Play A/B Test Results?

What is Google Play Store Listing Experiments?

Google Play Store Listing Experiments is Google’s free tool for app developers to test their Google Play Store Listing creatives with the goal of improving Google Play conversion rates. By using Google Play Store Listing Experiments you can run experiments to find the most effective graphics and localized text for your app.

You can run experiments for your main and custom store listing pages. Specifically, for each app, you can run one default graphics experiment or up to five localized experiments at the same time. A test can focus on a variety of store listing elements, including long descriptions, short descriptions, feature graphics, videos, icons, and screenshots.

Google also allows you to run experiments on custom store listings. Custom Store Listing is a feature in the Google Play Developer Console that lets you customize your Google Play Store Listing for a country or a group of countries. In contrast, a Localized Store Listing targets users by language rather than by geographic location.

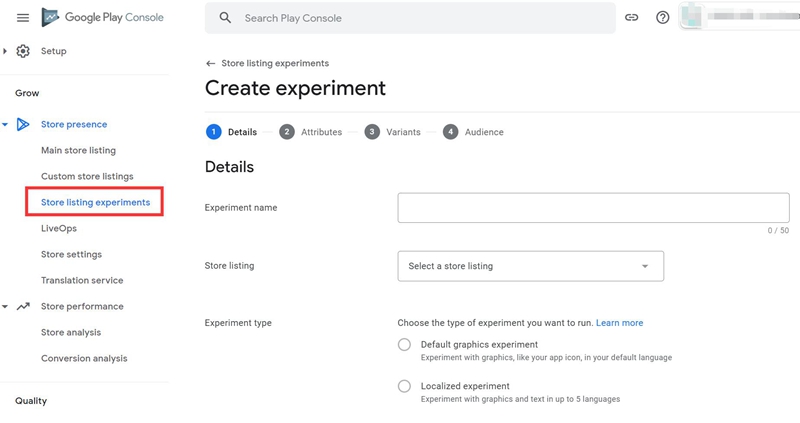

You can create an experiment using Play Console.

Default Graphics

- Sign in to Play Console.

- Select an app.

- On the left menu, click Store presence > Store listing experiments.

- Click New experiment.

- Under “Default graphics,” click Create.

- Set up your experiment.

Localized

- Sign in to Play Console.

- Select an app.

- On the left menu, click Store presence > Store listing experiments.

- Click New experiment.

- Under “Localized,” choose a language.

- Click Create.

- Set up your experiment.

Store listing experiment performance can be measured using either the first-time installers metric or the one-day retained users metric. These metrics are reported hourly, and you can opt in to email notifications when the experiment completes.

Google Play A/B Testing Best Practices

1. Determine A Clear Objective

Generally, the first stage of A/B testing is deciding on what you would like to test. Whatever you’re testing should lead to a greater goal such as higher conversion rates.

Then, A/B testing should begin with a hypothesis. For example, “we will improve conversion rate if we change our app’s icon from blue to yellow”. This helps us narrow our scope to just the app icon and not a huge overhaul of the app which should be tested more carefully and with smaller testing groups.

If you still do not have a clear objective, you can start by identifying a problem that you want to solve.

2. Target the Right People

App store experiments need traffic for statistically significant results. What’s behind it isn’t just numbers. They are real people or users exposed to the app, and test results are the outcomes of human interactions. Sadly, sometimes we forget who sees our tests. Sometimes, we let the wrong ones in.

For example, many people set “EN-US” as the primary language and then wrongly assume that an experiment created for this language only reaches Americans. That is true only if your app is available solely in the US. Otherwise, your users may come from any of the other countries where you have launched your app.

Similarly, if you set up a localized experiment for “French (France) – fr-FR”, your users may come not only from France but also from many other countries where French is widely used.

If you want to target an audience in a specific country with a specific language, you can create a custom store listing and run an A/B test on that listing.

Even within the same language, your app may reach audiences from different countries with different cultural backgrounds. Determine your target audience before you launch a test, and make sure your tests match that audience.

3. Test Just ONE Variant at A Time

If more than one thing changes in a single variant, it is difficult to determine which change users are responding to. For instance, you can test different sets of screenshots against each other, but if one set also comes with a different icon, any change in conversions could be due to either one.

4. Test ONE Element at A Time

What is it that you want to compare? Identify a problem you’d like to solve. It may be an element of design, copy, or function.

For example, if you want to test which feature attracts your users most, you can reorder your screenshots so that a different feature appears first. However, you should not change the order and the design of the screenshots at the same time. Otherwise, you cannot tell whether it is the feature or the design that makes the difference.

5. Prioritize Visuals Over Words

Based on my own trials and many conversations with colleagues in the industry, the consensus is that text (the long and short descriptions) has less effect than visuals. This means you should prioritize testing the icon, screenshots, and feature graphic before testing descriptions.

6. Don’t Test Worldwide

You will be surprised how differently users respond from country to country. It is hard to find what works and what doesn’t for people all over the world.

Pro Tip: If you launch your app or game in many countries, you should localize your store listing.

7. The Experiments Should Use the Largest Possible Test Audience

Lower traffic makes it harder to reach statistical significance. Therefore, when running a test, set up an even split: 50% each for two versions, or 25% each if four variants are being tested.

A large audience portion means you can reach a greater number of users for results that more accurately reflect the overall userbase, while an even split means it will be easier to see how each version is being received by those who see it.

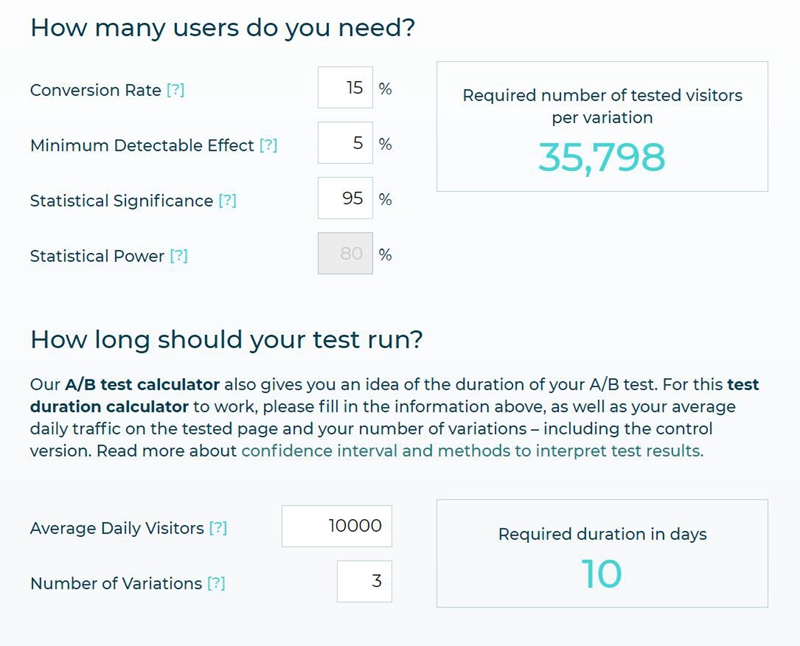

Some people claim 1,000 or 2,000 users is enough for the test. For me, results start to stabilize after 8,000 or 10,000 installs per variation (current installs, not scaled installs).

To make a test efficient, send as many users as possible to it. If you have fewer than 500 daily visitors, run the test for more days to collect enough data.

You can use a sample size calculator to estimate how many users you need in order to get reliable results from your experiment.
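If you don't have a calculator at hand, the arithmetic behind one can be sketched in a few lines of Python. This is only a rough sketch, assuming a standard two-sided two-proportion z-test. It is not the formula Google Play uses internally, and the function name, the 80% power default, and the example numbers are illustrative assumptions.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_cr, relative_lift, confidence=0.90, power=0.80):
    """Approximate visitors needed per variant (two-sided two-proportion z-test).

    baseline_cr   -- current conversion rate, e.g. 0.30 (assumed known)
    relative_lift -- smallest relative change worth detecting, e.g. 0.05 for +5%
    """
    if relative_lift == 0:
        raise ValueError("relative_lift must be non-zero")
    p1 = baseline_cr
    p2 = baseline_cr * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1):
        raise ValueError("conversion rates must stay between 0 and 1")
    # z-scores for the chosen confidence level and statistical power
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


# Example: 30% baseline conversion rate, hoping to detect a 5% relative lift.
print(sample_size_per_variant(0.30, 0.05))
```

Smaller expected lifts push the required sample up quickly, which is one reason low-traffic apps need longer tests.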

8. Test For At Least 7 Days, Even If You Have A Winning Test After 24 Hours

Every time you run an experiment, Google Play will tell you the experiment is complete after a few days. If you have a very large number of daily visitors, you can even get a result after 24 hours.

“Wow, that was fast!” we thought. “We can test a lot of ideas in a single month and come to a decision very quickly!” We were really impatient to complete all the tests.

As developers, we’re all eager to find quick results and apply them as soon as possible so we can get more installs and conversions immediately. But rushing here can easily lead you to the wrong conclusion.

Google suggests running the test for at least 7 days. They are right.

Running for a week allows you to capture both weekday and weekend behavior, even if you have enough traffic to get meaningful results in a few days. It’s crucial to make sure users aren’t biased by weekdays or weekends, seasonal events, holidays and more. You should run the test for more days to make the results stable.

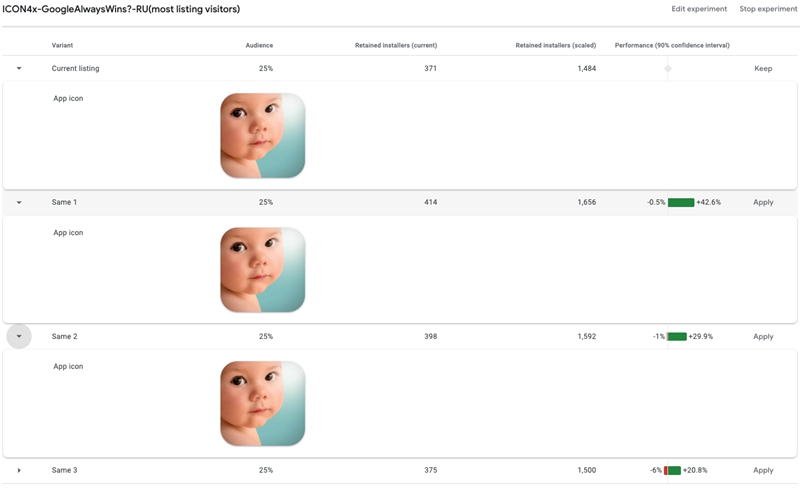

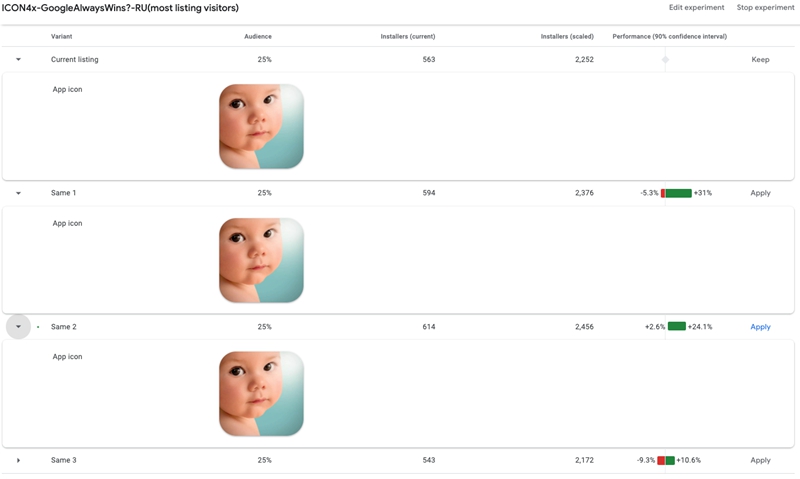
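One way to turn this advice into a plan is to estimate the test length before you start. The following is a minimal sketch under stated assumptions: the function name is hypothetical, it assumes all store visitors enter the experiment and are split evenly across variants, and rounding up to whole weeks is our own choice so that every weekday is represented equally.

```python
import math


def planned_test_days(required_per_variant, variants, daily_visitors, min_days=7):
    """Estimate how many days to run a test.

    required_per_variant -- visitors needed in each variant (from a sample size estimate)
    variants             -- number of versions in the test, including the current one
    daily_visitors       -- store listing visitors per day entering the experiment
    """
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    total_needed = required_per_variant * variants
    days_for_traffic = math.ceil(total_needed / daily_visitors)
    # Never go below the 7-day minimum, even with plenty of traffic.
    days = max(min_days, days_for_traffic)
    # Round up to full weeks so weekdays and weekends are covered evenly.
    return math.ceil(days / 7) * 7


# High-traffic app: the 7-day minimum applies even though traffic is sufficient sooner.
print(planned_test_days(1000, 2, 5000))
# Low-traffic app: traffic, not the minimum, determines the length.
print(planned_test_days(10000, 2, 500))
```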

9. Run A/B/B Tests to Flag False Positive Results

Google Play Store Listing Experiments report results with a 90% confidence interval, which is below the 95% confidence level used in most A/B tests.

A 90% confidence level leaves roughly a 10% chance that the result is wrong. That’s a lot.

That’s why you should always test your assets twice or run an A/B/B test. Creating two identical B variants in the test helps you assess whether a result is likely to be a true or a false positive: if both B samples produce similar results, the result is likely a true positive, whereas if they produce different results, one of the two is likely a false positive.
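The A/B/B comparison can be sketched in code as follows. This is an illustration rather than Google's own computation: it assumes a simple normal-approximation confidence interval on the difference in conversion rates, and the function names and sample numbers are hypothetical.

```python
from statistics import NormalDist


def lift_interval(control_installs, control_visitors,
                  variant_installs, variant_visitors, confidence=0.90):
    """Confidence interval for (variant rate - control rate), normal approximation."""
    p_c = control_installs / control_visitors
    p_v = variant_installs / variant_visitors
    se = (p_c * (1 - p_c) / control_visitors
          + p_v * (1 - p_v) / variant_visitors) ** 0.5
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = p_v - p_c
    return diff - z * se, diff + z * se


def verdict(interval):
    """Classify an interval as a win, a loss, or no clear result."""
    low, high = interval
    if low > 0:
        return "better"
    if high < 0:
        return "worse"
    return "inconclusive"


def abb_check(a, b1, b2, confidence=0.90):
    """a, b1, b2 are (installs, visitors) tuples; b1 and b2 use the same asset."""
    v1 = verdict(lift_interval(*a, *b1, confidence))
    v2 = verdict(lift_interval(*a, *b2, confidence))
    # If identical variants get different verdicts, treat the result with suspicion.
    return {"b1": v1, "b2": v2, "consistent": v1 == v2}


print(abb_check((3000, 10000), (3300, 10000), (3290, 10000)))
print(abb_check((3000, 10000), (3300, 10000), (3010, 10000)))
```

When the two B verdicts disagree, the safer reading is that the apparent win may be noise, and the test is worth repeating.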

10. Don’t Apply a Google Play Winning Test On iOS

Because the App Store long had no built-in A/B testing tool, it is common to use a Google Play store asset to test an idea and then duplicate the same asset for the App Store after validating the idea.

However, iOS and Android users are different, with different expectations and attitudes that shape their download intent and habits. Moreover, the two stores have different layouts and asset compositions. What seems prominent on Google Play may be hard to notice on the App Store.

Therefore, you should not apply a Google Play winning test to the App Store.

11. Monitor How Your Installs Are Affected After Applying a Winning Test

Even if the experiment result states that a test variant would have performed better, its actual performance could still differ, especially if the reported interval spanned both red (negative) and green (positive) values.

So, after applying the better test variant, keep an eye on how your installs are affected. The true impact may be different than predicted.
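A simple way to keep an eye on this is to compare the conversion rate for an equal number of days before and after the change, and check it against the range the experiment predicted. The sketch below assumes you export daily visitors and installs yourself; the function names and the numbers are hypothetical.

```python
def conversion_rate(days):
    """days: list of (visitors, installs) tuples, one per day."""
    visitors = sum(v for v, _ in days)
    installs = sum(i for _, i in days)
    if visitors == 0:
        raise ValueError("no visitors in the period")
    return installs / visitors


def observed_relative_lift(before, after):
    """Relative change in conversion rate between two periods of equal length."""
    if len(before) != len(after):
        raise ValueError("compare periods with the same number of days")
    cr_before = conversion_rate(before)
    if cr_before == 0:
        raise ValueError("baseline conversion rate is zero")
    return (conversion_rate(after) - cr_before) / cr_before


def matches_prediction(observed, predicted_low, predicted_high):
    """True if the observed lift falls inside the range the experiment reported."""
    return predicted_low <= observed <= predicted_high


# Seven days before and seven days after applying the winning variant.
before = [(1000, 300)] * 7
after = [(1000, 315)] * 7
lift = observed_relative_lift(before, after)
print(f"observed lift: {lift:.1%}")
print(matches_prediction(lift, 0.01, 0.08))
```

Other factors such as marketing campaigns or seasonality also move installs, so a mismatch is a prompt to investigate rather than proof that the test was wrong.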

Should You Still Test Feature Graphic?

Since the Google Play Store redesign in 2018, the feature graphic is no longer displayed at the top of an app’s store listing. If you have a promo video, your feature graphic is used as the cover image with a Play button overlaid on top.

So, is the feature graphic still worth A/B testing for apps that have no video in the Play Store?

Many ASO practitioners think it is, because the feature graphic can also be displayed in the Ads section and the “Recommended” section of the Play Store.

However, in my opinion, it makes no sense. We should A/B test the feature graphic, but we should not test it with Google Play Store Listing Experiments.

I wouldn’t test it on Google Play if I don’t have an app preview video, because without one the feature graphic is not visible to users on the app page. Google Play Store Listing Experiments also does not let us restrict a test to UAC users only. As a result, feature graphic A/B testing is nearly useless: the difference in performance between A and B will come more from differences in user profiles between the two samples than from what people actually see on the store listing page.

Summary: Should You Rely on Google Play A/B Test Results?

Partly.

If you run A/A, B/A, or A/B/B tests, you may sometimes find completely different results for the exact same experiment. Here is an example.

There are 3 factors that can hugely affect your test results.

- The sample is too small. As in the example above, the number of users in the test is too small to conclude anything.

- User download behavior may vary substantially across days of the week (for example, on weekends people may be more willing to download a new app without caring so much about its icon or screenshots). Developers who run a test for fewer than 7 days are more likely to get wrong results.

- Store Listing Experiments operate with 90% confidence that the results are correct. This means there is a 10% chance that the result is wrong.
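To get a feel for the third factor, you can simulate A/A tests, where both versions are identical, and count how often a difference is still declared at 90% confidence. The sketch below uses a plain two-proportion z-test as a stand-in for Google Play's own method, and all parameter values are assumptions chosen for illustration.

```python
import random
from statistics import NormalDist


def aa_false_positive_rate(true_cr=0.30, visitors_per_arm=1000, trials=1000,
                           confidence=0.90, seed=42):
    """Share of simulated A/A tests that wrongly report a difference."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    false_positives = 0
    for _ in range(trials):
        # Both arms draw installs from the same true conversion rate.
        installs_a = sum(rng.random() < true_cr for _ in range(visitors_per_arm))
        installs_b = sum(rng.random() < true_cr for _ in range(visitors_per_arm))
        p_a = installs_a / visitors_per_arm
        p_b = installs_b / visitors_per_arm
        se = ((p_a * (1 - p_a) + p_b * (1 - p_b)) / visitors_per_arm) ** 0.5
        # Count the trial if the z-statistic crosses the significance threshold.
        if se > 0 and abs(p_b - p_a) / se > z_crit:
            false_positives += 1
    return false_positives / trials


print(f"false positive rate at 90% confidence: {aa_false_positive_rate():.1%}")
```

In runs like this the rate tends to land near one in ten, which is why a single winning test should not be treated as final.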

So how can you improve the credibility of Google Play experiments? Strictly follow the A/B testing best practices listed in this post.